How to build a Deep Learning system that will answer questions about the Harry Potter universe?


This blog post was originally published on Medium on 08/06/2021.

To build a Q&A system that answers questions about the Harry Potter universe, we will combine NVIDIA Riva with a pretrained model from HuggingFace.

What is NVIDIA Riva?

In essence, it is a set of APIs that give you access to cutting-edge NLP techniques for both text and speech.

It comes with many pretrained models and a built-in mechanism for serving them.

Why do I care about Riva?

I love wearing the researcher hat, where I am learning about a problem and coming up with a solution.

I equally enjoy being a student: reading arXiv papers, listening to talks, and so on.

But at some point, you want to start delivering business value, whether as an employee, as a freelancer, or while hacking on a side project.

In that framing, Riva is a set of APIs into a very complex, very well-staffed AI research organization. With Riva, NVIDIA captured all of that expensive expertise and the considerable resources that go into training models, packaged the results, and made them available to us for free.

These are exciting times: what we are starting to see is exactly what will lead to the mass adoption of AI. For certain use cases, you no longer have to staff an elaborate AI department. Instead, you can tap into much of that expertise, encapsulated as API calls, for free.

This gives people who would otherwise lack the resources or know-how enormous power to create and build things.

What will our application do?

My daughter is a big Harry Potter fan and she wants to know everything about the world he inhabits! What were the names of the wizards who founded the four houses at Hogwarts? What does this or that spell do? So many questions!

Let’s build an application that can help us answer some of these questions!

To do so, we will combine a HuggingFace model for text embedding with the Riva Q&A API.

In this article, I will explain the main components, but if you would prefer to follow along with the code, you can find it here. Please note how little code I had to write! The most important components come prepackaged.

Lifting the curtain on the application

First, we need to represent the paragraphs from the Harry Potter book as vectors (essentially, just a bunch of numbers). The process of going from a piece of text to a vector is called embedding. The hope is that the function that embeds the text (in our case, a HuggingFace model) will capture some semantic information about the input. Ideally, two similar passages should produce embeddings that are in some way alike.
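To make the idea concrete, here is a minimal sketch of embedding and comparing texts. It deliberately does not use a HuggingFace model: `embed` is a hypothetical stand-in that turns text into a normalized bag-of-words vector, so it captures word overlap rather than meaning. In the real application this function would be replaced by a call to the pretrained model, but the contract stays the same: text goes in, a vector comes out, and similar passages should score higher under a similarity measure such as cosine similarity (our choice here, not necessarily the one used in the original code).

```python
import math
import re
from collections import Counter
from typing import Dict

Vector = Dict[str, float]


def embed(text: str) -> Vector:
    """Hypothetical stand-in for a HuggingFace embedding model.

    Returns a sparse, L2-normalized bag-of-words vector (word -> weight).
    A real model would return a dense vector that reflects meaning, not just shared words.
    """
    counts = Counter(re.findall(r"[a-z']+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()} if norm else {}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; both vectors are unit length, so it reduces to a dot product."""
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


wand = embed("Harry raised his wand and cast a spell")
spell = embed("She cast a spell with her wand")
feast = embed("The feast had roast chicken and pumpkin juice")
print(round(cosine(wand, spell), 3))  # 0.535 (shares "wand", "cast", "a", "spell")
print(round(cosine(wand, feast), 3))  # 0.125 (shares only "and")
```

The two wand-and-spell sentences end up much closer to each other than to the feast sentence, which is the property the retrieval step relies on.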

When we submit a question, it will also get embedded using the same model. We can then compare the embeddings of all the paragraphs with the embedding for the question and seek out the paragraphs that seem to be most relevant.
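The retrieval step can be sketched as follows, again under the assumption that a toy bag-of-words `embed` stands in for the HuggingFace model. The helper names `build_index` and `retrieve` are hypothetical: paragraph embeddings are computed once up front, each question is embedded at query time, and the `k` most similar paragraphs are returned. The example paragraphs are short toy sentences written for this sketch, not quotes from the books.

```python
import heapq
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def embed(text: str) -> Vector:
    # Hypothetical stand-in for the HuggingFace model: L2-normalized bag of words.
    counts = Counter(re.findall(r"[a-z']+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()} if norm else {}


def cosine(a: Vector, b: Vector) -> float:
    # Both vectors are unit length, so the dot product equals cosine similarity.
    return sum(v * b.get(w, 0.0) for w, v in a.items())


def build_index(paragraphs: List[str]) -> List[Tuple[str, Vector]]:
    # Embed every paragraph once; only questions are embedded per query.
    return [(p, embed(p)) for p in paragraphs]


def retrieve(question: str, index: List[Tuple[str, Vector]], k: int = 1) -> List[str]:
    """Return the k paragraphs whose embeddings are most similar to the question's."""
    q = embed(question)
    scored = [(cosine(q, vec), i) for i, (_, vec) in enumerate(index)]
    return [index[i][0] for _, i in heapq.nlargest(k, scored)]


# Toy paragraphs written for this example (not quotes from the books).
index = build_index([
    "Godric Gryffindor, Helga Hufflepuff, Rowena Ravenclaw and Salazar Slytherin "
    "founded the four houses of Hogwarts.",
    "The Quidditch match was played in heavy rain.",
    "Lumos is a spell that lights the tip of the wand.",
])
print(retrieve("What does Lumos do?", index))
# ['Lumos is a spell that lights the tip of the wand.']
```

A linear scan like this is fine for the few thousand paragraphs of a single novel; much larger collections usually call for a dedicated vector index.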

The part that we have covered thus far is called Information Retrieval. It is an active area of research and there are many ways one could go about looking up relevant information. Thanks to HuggingFace, we have access to one of the most powerful methods with complete ease!

So why go to all this effort? The Q&A API takes in a pair consisting of a question and a context. What we have built so far is a way of going from a question to a relevant piece of text that will hopefully contain the answer.

The next step is sending both the question and the context to the Riva inference server, and back comes the answer!
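The overall flow, from question to context to answer, can be sketched as plain glue code. This is not the Riva client API: `retrieve` and `ask_qa` are hypothetical callables, where in the article's setup `retrieve` would be the HuggingFace-based retrieval step and `ask_qa` would wrap the request to the Riva Q&A service. The sketch goes one step beyond the article by trying up to `max_contexts` ranked contexts when the Q&A model declines to answer from the first one.

```python
from typing import Callable, List, Optional

Retriever = Callable[[str], List[str]]           # question -> ranked contexts
QAClient = Callable[[str, str], Optional[str]]   # (question, context) -> answer or None


def answer_question(question: str, retrieve: Retriever, ask_qa: QAClient,
                    max_contexts: int = 3) -> Optional[str]:
    """Retrieve ranked contexts, then send (question, context) pairs to the Q&A model."""
    for context in retrieve(question)[:max_contexts]:
        answer = ask_qa(question, context)
        if answer:  # None or "" means the model declined to answer from this context
            return answer
    return None


# Toy stand-ins for illustration only: a fixed retriever, and a fake "Q&A model"
# that answers only when the context mentions Lumos.
def fake_retrieve(question: str) -> List[str]:
    return ["The Quidditch match was played in heavy rain.",
            "Lumos is a spell that lights the tip of the wand."]


def fake_qa(question: str, context: str) -> Optional[str]:
    return "lights the tip of the wand" if "Lumos" in context else None


print(answer_question("What does Lumos do?", fake_retrieve, fake_qa))
# lights the tip of the wand
```

Keeping both steps behind plain function signatures makes it easy to later move the embedding model onto the inference server without touching the rest of the application.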

The purist in me cannot help but observe that, in some sense, we are cheating here. We run a Deep Learning model locally and ship its output to our inference server. This is not what we would want to do in production, where we would want all the magic to happen on the inference server. First, the Triton inference server can handle many requests asynchronously and is blazingly fast. Second, from a development and scaling perspective, you want to talk to endpoints that handle the heavy lifting: you probably don't want to put a GPU into every web server you spin up. Instead, you want that expensive, special-purpose resource to be centralized.

NVIDIA might soon release a text embedding model, but for now, we have to make do! Bear in mind that other applications (or other approaches to Information Retrieval) might not require running a model locally, and that is exactly where the Triton inference server could really shine!

The results

So how did it all work?

Miraculously, it works 🙂 This is a pleasant surprise: going from unstructured, complex text such as the prose of a novel to answering questions about it seems like a tall order.

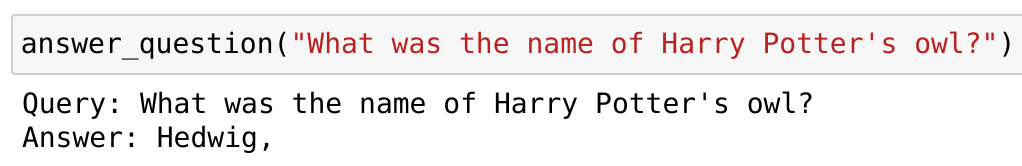

The model can answer straightforward questions.

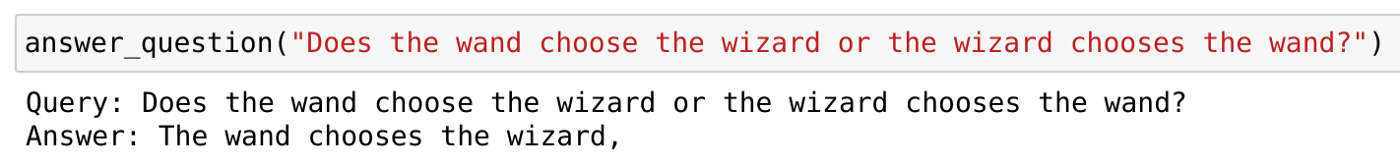

But it can also venture into the more esoteric realm of abstractions.

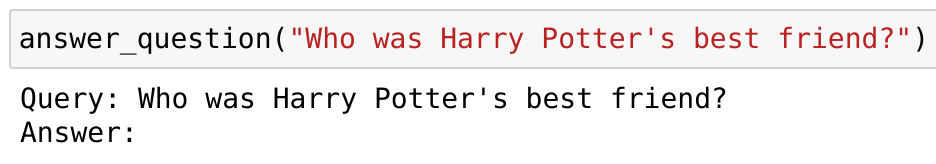

The situation is not perfect, though. For many seemingly simple questions, we often get no answer at all. That might actually be a good thing: if the Q&A API is uncertain of the answer, it chooses not to produce one rather than return something nonsensical.
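The decision to abstain can be illustrated with a sketch of how many extractive Q&A models (for example, BERT-style models trained on SQuAD 2.0) choose between answering and staying silent. We have not verified that Riva's Q&A model works exactly this way, so treat `pick_answer`, its parameters, and the made-up tokens and logits below as hypothetical. The model scores every candidate span by its start and end logits and also scores a special "no answer" option at the first (`[CLS]`) position; if "no answer" beats the best span by more than a threshold, nothing is returned.

```python
from typing import List, Optional, Tuple


def pick_answer(tokens: List[str], start_logits: List[float], end_logits: List[float],
                null_threshold: float = 0.0, max_answer_len: int = 30) -> Optional[str]:
    """Choose the best answer span, or abstain if the "no answer" score wins.

    Position 0 plays the role of the [CLS] token: start_logits[0] + end_logits[0]
    is the score for "there is no answer in this context".
    """
    null_score = start_logits[0] + end_logits[0]
    best: Tuple[float, int, int] = (float("-inf"), -1, -1)
    for s in range(1, len(tokens)):
        # Only consider spans that end at or after their start, up to max_answer_len tokens.
        for e in range(s, min(s + max_answer_len, len(tokens))):
            score = start_logits[s] + end_logits[e]
            if score > best[0]:
                best = (score, s, e)
    best_score, s, e = best
    # Abstain when "no answer" outscores the best span by more than the threshold.
    if s < 0 or null_score - best_score > null_threshold:
        return None
    return " ".join(tokens[s:e + 1])


tokens = ["[CLS]", "lumos", "lights", "the", "wand"]
print(pick_answer(tokens, [0.5, 0.1, 3.0, 0.2, 0.1], [0.5, 0.1, 0.2, 0.3, 2.5]))
# lights the wand
print(pick_answer(tokens, [4.0, 0.1, 0.3, 0.2, 0.1], [4.0, 0.1, 0.2, 0.3, 0.4]))
# None
```

Raising `null_threshold` makes the sketch answer more often (at the risk of nonsense); lowering it makes it more cautious.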

Having played around with this setup, I suspect the culprit is the text that is provided as context. Novels contain a lot of information, but very little of it is given in a declarative, straightforward way. The Q&A API would have a much easier time if we fed it articles from Wikipedia, or blog posts on a given topic.

Nonetheless, the performance remains very impressive. Riva is still in beta, and it will be very interesting to see what people build with it once more developers learn of its existence.