Use ChatGPT inside Jupyter Notebook

Bringing the new tool as close as possible to where people already do their work is key.

AI won't take your job, but someone using AI will.

We are at an inflection point where Large Language Models are turning into powerful productivity tools.

It is very interesting to think about why that is.

Who would have foreseen that next-word prediction accuracy could be a good proxy for the fidelity of the world model that an LLM constructs?

Or that new abilities would emerge in LLMs through scaling?

These are fascinating subjects, and I highly encourage you to read a Twitter thread I wrote summarizing a conversation between Jensen Huang (CEO of NVIDIA) and Ilya Sutskever (co-founder and Chief Scientist at OpenAI) that touches on many of these topics.

But these considerations aside, the question becomes: how do we incorporate these new tools into our work?

Personally, I haven't found incorporating them into my workflow easy.

But we can use the same tactics companies use to help with the adoption of their products.

And a major one that stands out among them is reducing friction. Bringing the new tool as close as possible to where people already do their work is key.

And that is how ask_ai was born.

What is ask_ai?

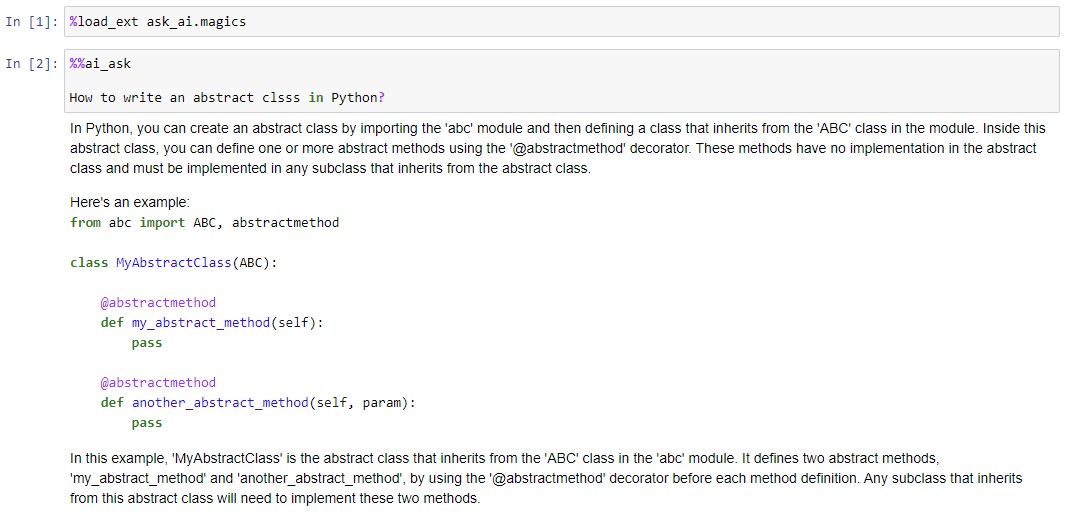

ask_ai is a Jupyter Notebook magic that lets you talk to the OpenAI API without leaving Jupyter Notebook.

All you have to do is set the OPENAI_API_KEY environment variable to your API key, load the extension in Jupyter Notebook with %load_ext ask_ai.magics, and you are off to the races!

What can ask_ai do?

You can use it to ask questions, just as you would when talking to ChatGPT through OpenAI's web interface.

By default, the gpt-3.5-turbo model is used.

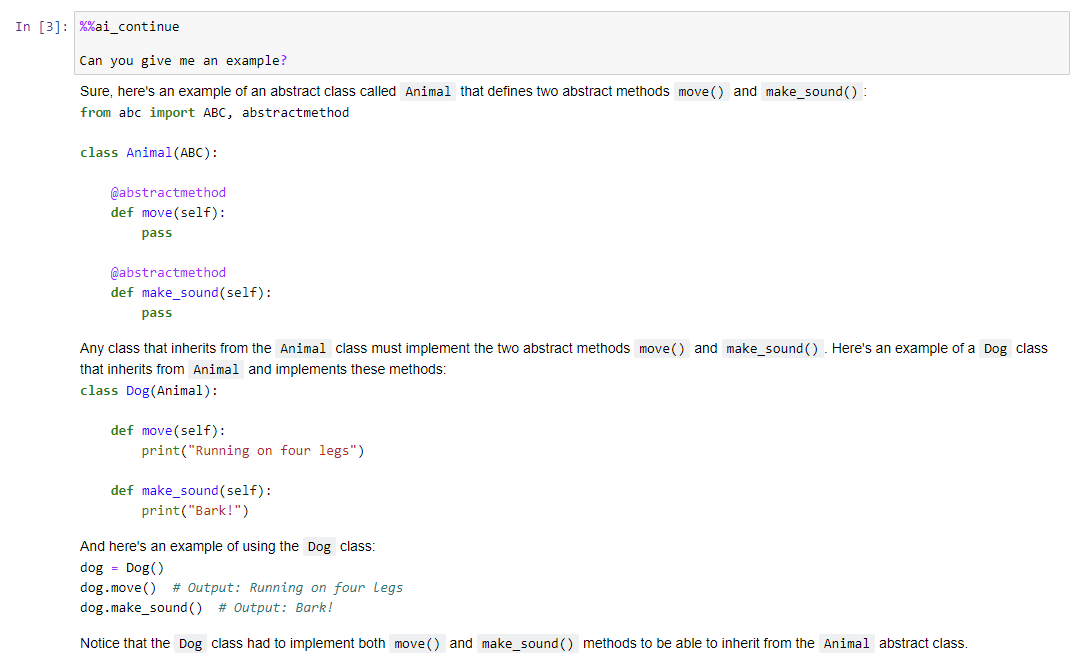
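To give a feel for what a magic like this has to do behind the scenes, here is a minimal sketch of a single request to OpenAI's Chat Completions endpoint using only the Python standard library. It is not ask_ai's actual code: the helper name build_chat_request is hypothetical, and the sketch simply assumes the key is read from OPENAI_API_KEY and that gpt-3.5-turbo is the default model. The request is only built here, not sent.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"
DEFAULT_MODEL = "gpt-3.5-turbo"


def build_chat_request(prompt: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for one user prompt.

    Hypothetical helper for illustration; ask_ai's real implementation may differ.
    """
    api_key = os.environ.get("OPENAI_API_KEY", "")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Sending it would look like this (needs a valid key and network access):
# with urllib.request.urlopen(build_chat_request("What is a Jupyter magic?")) as resp:
#     reply = json.load(resp)["choices"][0]["message"]["content"]
```

The answer comes back as JSON, and the assistant's text sits under choices[0].message.content.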

You can start a new conversation using %%ai_ask or continue the previous one using %%ai_continue.

In the latter case, the previous context will be sent along with whatever you type.
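One way to picture the difference between the two magics is as a piece of shared conversation state. The sketch below is hypothetical (ChatHistory and its methods are illustrative names, not ask_ai's API): starting a conversation replaces the history, while continuing appends to it, so the whole list can be sent with the next request.

```python
from typing import Dict, List

Message = Dict[str, str]


class ChatHistory:
    """Hypothetical store for the messages of the current conversation."""

    def __init__(self) -> None:
        self.messages: List[Message] = []

    def ask(self, prompt: str) -> List[Message]:
        # %%ai_ask-style: discard the previous conversation and start fresh.
        self.messages = [{"role": "user", "content": prompt}]
        return list(self.messages)

    def continue_(self, prompt: str) -> List[Message]:
        # %%ai_continue-style: append to the history so the model sees earlier turns.
        self.messages.append({"role": "user", "content": prompt})
        return list(self.messages)

    def record_reply(self, reply: str) -> None:
        # Store the model's answer so later turns can refer back to it.
        self.messages.append({"role": "assistant", "content": reply})
```

The trailing underscore in continue_ is only there because continue is a reserved word in Python.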

Since gpt-3.5-turbo has a context window of 4,096 tokens, at some point your conversation history might exceed that limit.

In such a case, the earlier conversation will be trimmed to ensure the model receives the most recent messages.

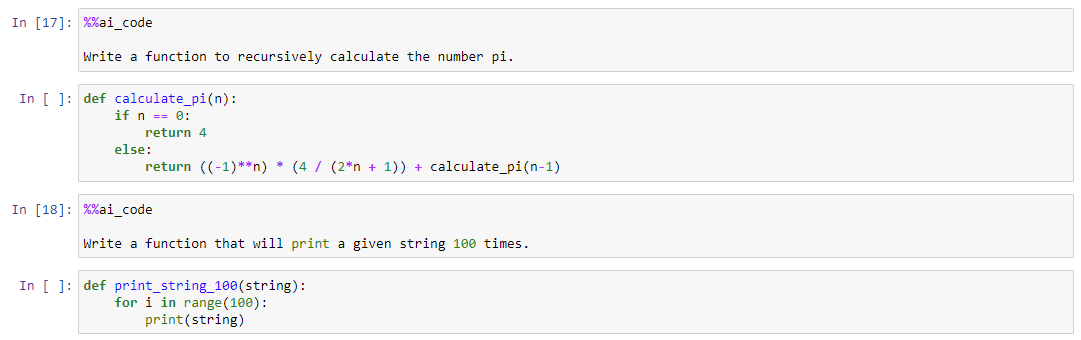

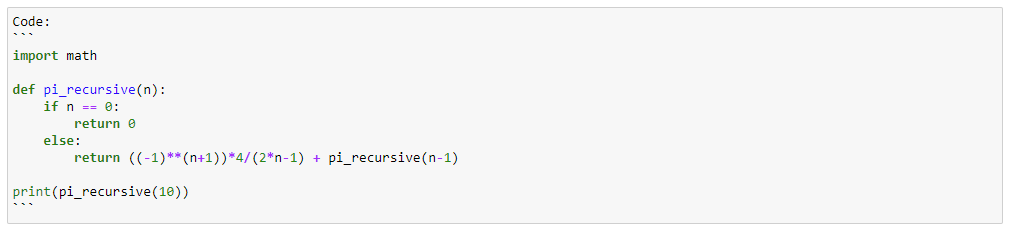
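Trimming like this can be done by walking the history backwards from the newest message and keeping messages until a token budget is used up. The sketch below rests on loudly stated assumptions: it estimates tokens with a rough four-characters-per-token rule instead of the model's real tokenizer, reserves a hypothetical 1,000 tokens for the model's reply, and uses made-up names (approx_tokens, trim_history).

```python
from typing import Dict, List

Message = Dict[str, str]

CONTEXT_LIMIT = 4096  # gpt-3.5-turbo's context window, shared by prompt and reply


def approx_tokens(text: str) -> int:
    """Rough token estimate. Assumption: ~4 characters per token for English text.

    An exact count would need the model's own tokenizer.
    """
    return max(1, len(text) // 4)


def trim_history(
    messages: List[Message], budget: int = CONTEXT_LIMIT, reserve: int = 1000
) -> List[Message]:
    """Keep the most recent messages whose estimated size fits in budget - reserve tokens."""
    available = budget - reserve  # leave room for the model's answer (hypothetical amount)
    kept: List[Message] = []
    used = 0
    # Walk backwards from the newest message and stop once the budget is exhausted.
    for message in reversed(messages):
        cost = approx_tokens(message["content"])
        if used + cost > available:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Note that this sketch returns an empty list if the newest message alone is too long; a real implementation would need to handle that case, for example by truncating the message or raising an error.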

Additionally, you can ask ask_ai to write code for you!

Sometimes extraneous lines will be returned, but they are easy to delete.

This issue could be easily solved with a bit of regex magic, but I decided to keep things as they are.

I mostly treat this project as a learning opportunity, and there is value in understanding what the model is doing.

Plus, once the GPT-4 API becomes available, this problem will likely go away (GPT-4 is said to adhere much more closely to the system prompt than earlier versions).

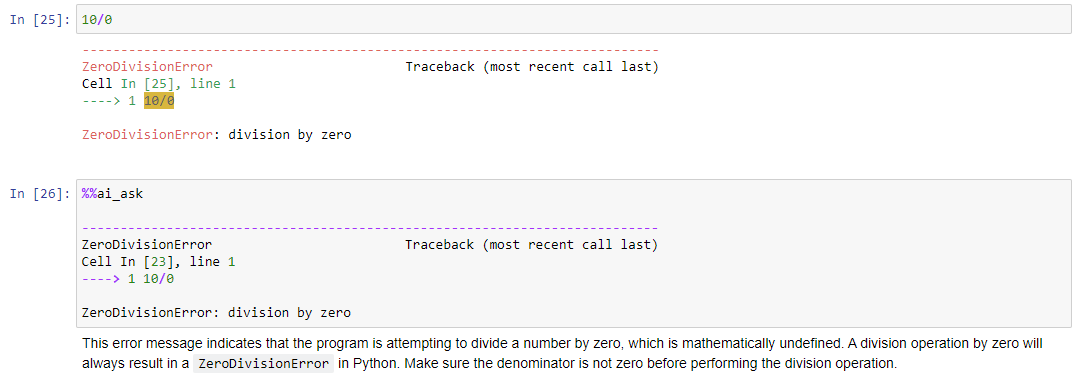
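For the curious, the regex clean-up mentioned above could look something like this. It is a minimal sketch, not part of ask_ai, and it assumes the model wraps its code in Markdown code fences; extract_code is a hypothetical name. Anything outside the fences (greetings, explanations) is dropped, and if no fence is found the reply is returned with only surrounding whitespace removed.

```python
import re

# A Markdown code fence, built this way so it does not clash with the page's own fences.
FENCE = "`" * 3

# Opening fence, optional language tag, newline, then the code up to the closing fence.
CODE_BLOCK_RE = re.compile(FENCE + r"[^\n]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(reply: str) -> str:
    """Keep only the fenced code in a model reply (hypothetical helper).

    Falls back to the whole reply if the model did not use any fences.
    """
    blocks = CODE_BLOCK_RE.findall(reply)
    if not blocks:
        return reply.strip()
    # strip("\n") trims blank lines but keeps the code's indentation intact.
    return "\n\n".join(block.strip("\n") for block in blocks)
```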

BONUS: The way Reinforcement Learning from Human Feedback (RLHF) was performed by OpenAI lends itself really well to troubleshooting code issues!

All you have to do is send your error message, and the OpenAI model will take it from there.
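In a notebook you can simply paste the traceback into an %%ai_ask cell. If you would rather build that prompt in code, here is a minimal sketch using Python's traceback module; error_prompt and the prompt wording are hypothetical, not part of ask_ai.

```python
import traceback


def error_prompt(exc: BaseException) -> str:
    """Turn a caught exception into a prompt asking the model for a fix.

    Hypothetical helper; the wording of the prompt is an assumption.
    """
    tb = "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))
    return "I got the following error in Python. What is wrong and how do I fix it?\n\n" + tb


try:
    config = {"a": 1}
    value = config["b"]  # raises KeyError
except KeyError as err:
    prompt = error_prompt(err)
    print(prompt)
```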

What's next?

This was just a small project for me to explore the OpenAI API and to make it easier for me to start using GPT LLMs in my work!

Maybe this can be useful to you too 🙂 You can find the project on GitHub here.

The code was developed using nbdev, so it is notebooks all the way down!